Shaping Recycling Logistics

Table of Contents

From Spreadsheets to Software

Imagine a daily ritual involving exporting CSV files from various WordPress portals – all containing multiple files per portal, for orders and returns These CSVs were then fed into a series of VBScripts, which would dutifully copy data to a "log file" (our ever reliable, source of truth.), and then into a sprawling Excel sheet named "TEMP."

This "TEMP" sheet was where the real fun began. Manual data sanitisation needed to be performed on unchecked user input. Postcodes had to be checked, quantities verified, and potential duplicates flagged. Any job that looked suspicious, or simply didn't fit the mould, was manually placed into a "QUERY" state. This was our human-powered error detection system, and it worked, but at the cost of countless hours and the occasional existential crisis.

Once the data was "clean" (or at least, clean enough not to upset our courier service), another script would move it to a "MASTER" spreadsheet. The Master spreadsheet was where admin staff worked on following the collections and orders through to completion, ensuring that any issues with collections are resolved, communicating with both service user and courier to get correct collection instructions etc. Weekly, another VBScript would churn out PDF waste transfer notes, and then, finally, the completed entries were manually archived. It was a process that worked, but it was fragile, prone to human error, and certainly not scalable.

Building a Robust Foundation

My goal was clear: eliminate the spreadsheets, banish the VBScripts, and automate as much as possible. I selected a secure and scalable stack: Spring Boot (v3.5.3) with Java (OpenJDK v21 LTS) for the backend, Vaadin (v24.8.0) for server-side rendered frontend, and MySQL (v8.4 LTS) as our new, authoritative data store.

Architectural Pillars

A key architectural decision was to centralise all database logic within Spring

service classes (@Service). This ensures that my Vaadin views remain lean and

focused on presentation, never directly interacting with the database. This

separation of concerns not only improves maintainability but also lays the

foundation for future scalability and API integrations.

The Data Backbone

I designed a normalised relational schema to replace the flat-file chaos. This

includes dedicated tables for programs (our recycling initiatives),

customers (the account holders), addresses (allowing multiple sites per

customer), deliveries, collections, and job_queries (our new, structured

way to flag issues). This structured approach is fundamental to automating our

processes and providing accurate analytics.

Algorithms and Smooth Interfaces

The transition wasn't just about moving data; it was about embedding intelligence and significantly improving the user experience.

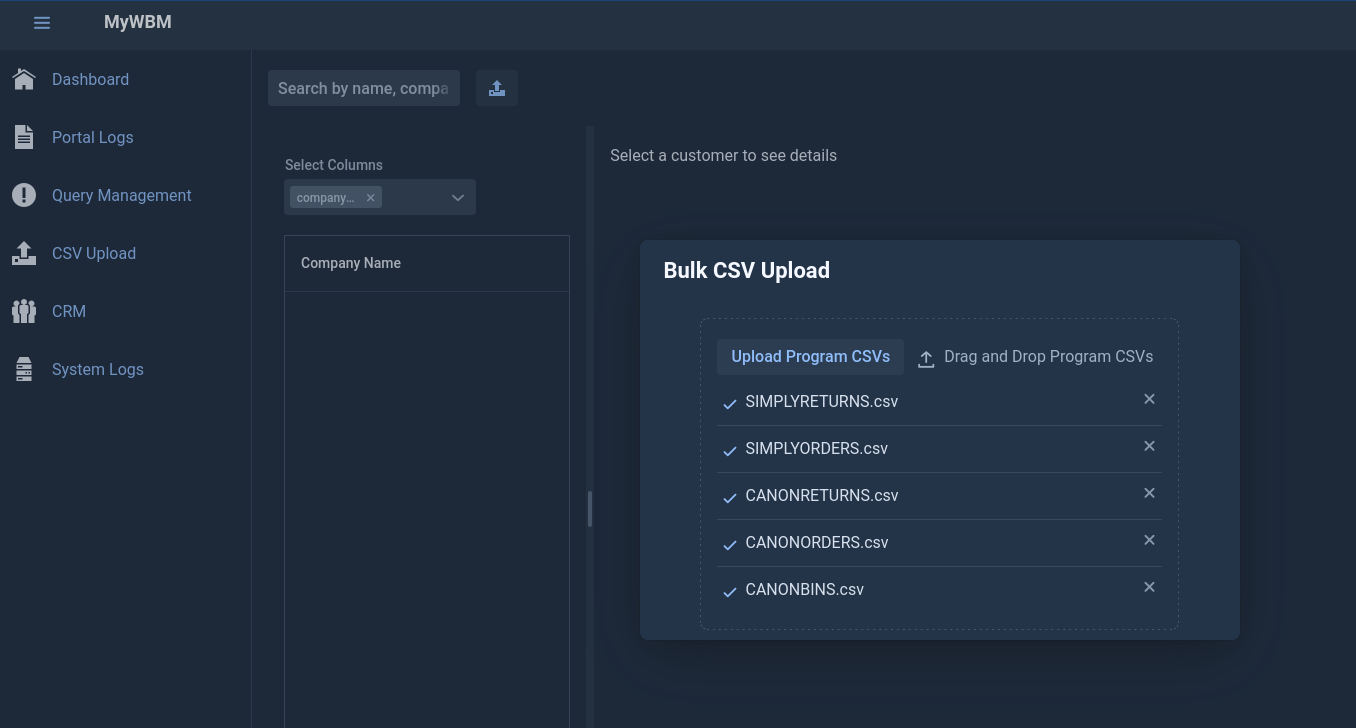

Automated Ingestion: Taming the CSV Beast

One of the first challenges was automating the CSV ingestion. My new system

allows for staff to upload multiple CSV's at once and letting the algorithm figure

out which program the data belongs to and shoving it in the right place,

performing automated data sanitisation and validation, including postcode checks

and business rule flagging. Jobs that require manual review are automatically

placed into a QUERY state, complete with a detailed JobQuery entry.

Optimising File Uploads

Initially, my approach to file uploads was straightforward: process each file sequentially, performing all validation and database operations within the same request. While this worked for smaller datasets, it quickly became a bottleneck. With over 12,000 records to manage, within a multi-tenant application a slow upload process could easily consume server resources, leading to a sluggish experience for everyone. Oh, didn't I mention? we are testing this on a server with only 2GB of RAM and 2 vCPU's! The server is also handling other services, such as this lovely article you are reading!

The need for optimisation was clear. I needed a solution that could handle large files efficiently, provide real-time feedback to the user, and avoid tying up server resources during lengthy processing. My solution involved decoupling the upload from the processing.

Now, when a user uploads a CSV, the file is first received and queued. A progress bar provides immediate visual feedback, assuring the user that their upload is being handled. The actual parsing and database operations are then performed sequentially for each file in the queue. While the processing itself is not yet fully asynchronous with real-time UI updates, the decoupling of the upload from the processing, combined with a significantly optimised duplicate checking mechanism, dramatically improves performance.

Previously, when re-importing files that might contain existing entries, the system would read each line from the CSV and then query the database to check for duplicates. This resulted in an inefficient N+1 query problem, where N is the number of records in the CSV. For large files, this was a major performance bottleneck.

My optimised approach now involves pre-fetching all existing WordPress IDs

(unique identifiers for jobs from the old system) for a given program into a

HashSet before processing any new CSV data. This allows for near

constant-time (O(1) on average) lookup for duplicates for each incoming record.

The speed of processing is now primarily dependent on the number of new

entries, rather than the total number of entries in the CSV or the existing

records in the database.

Set<Long> existingWpIds = new HashSet<>();

if(JOB_TYPE_DELIVERY.equalsIgnoreCase(jobType)) {

existingWpIds.addAll(crmService.getDeliveryRepository().findAllWpIdsByProgram(program));

} else if(JOB_TYPE_COLLECTION.equalsIgnoreCase(jobType) || JOB_TYPE_BIN.equalsIgnoreCase(jobType)

|| JOB_TYPE_PALLET.equalsIgnoreCase(jobType)) {

existingWpIds.addAll(crmService.getCollectionRepository().findAllWpIdsByProgram(program));

}

/* ... Later in the processing loop ... */

if(existingWpIds.contains(wpId)) {

problems.add("Duplicate entry found for wpId: " + wpId);

hasProblem = true;

}

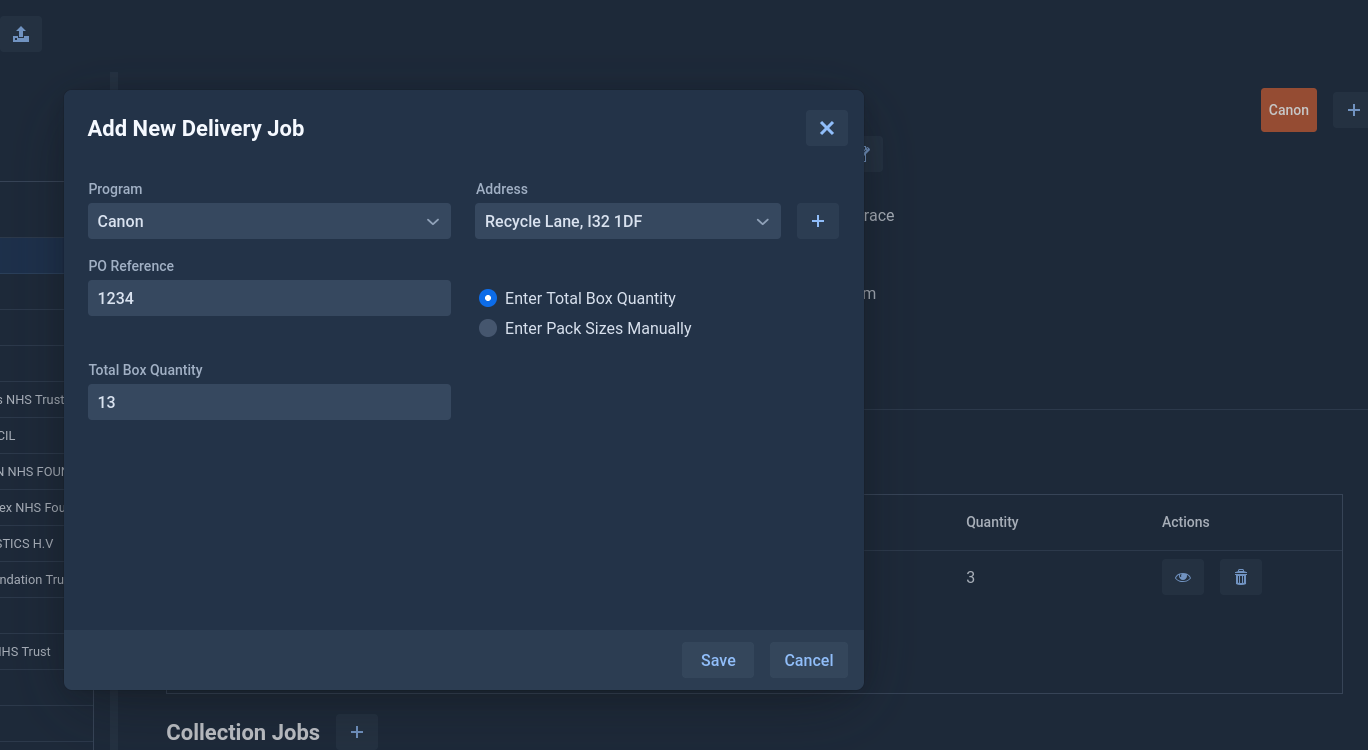

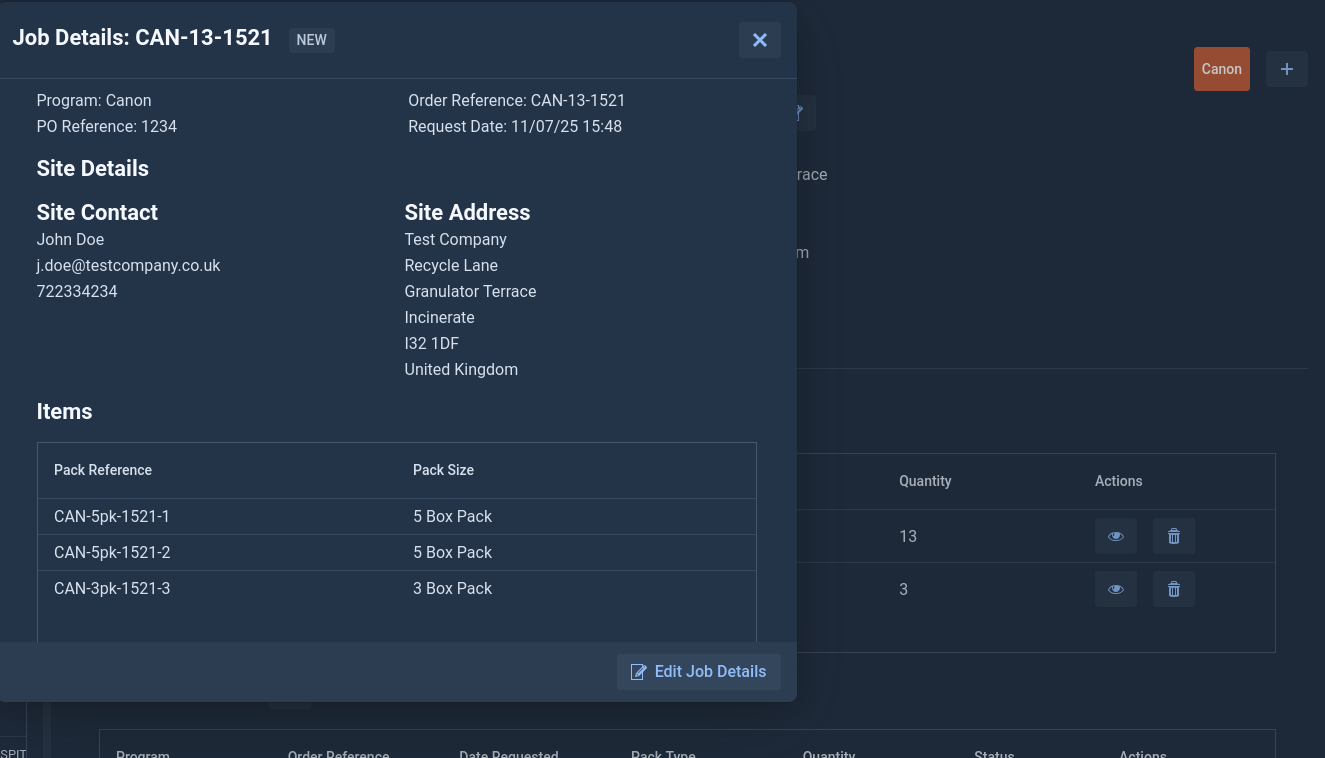

The Pack Distribution Puzzle

A particularly fun challenge was optimising the distribution of boxes into various pack sizes for deliveries. Given a total number of boxes, how do you determine the most efficient combination of available pack sizes (e.g., 3-box, 6-box, 5-box packs)? This isn't just about fitting; it's about cost-efficiency and minimising the number of individual packs along with ensuring minimal manual labour effort for warehouse staff.

I went with a dynamic programming approach, encapsulated within my

PackDistributionService. This algorithm calculates the optimal combination by

considering the "cost" (a weighted value representing efficiency) of each pack

size.

@Service

public class PackDistributionService {

/* Defines a "cost" for each pack size, influencing the optimisation. */

/* Lower cost implies higher preference in the distribution. */

private int getPackCost(int boxQuantity) {

if(boxQuantity == 3) return 2; /* Base unit, efficient. */

if(boxQuantity == 6) return 3; /* More efficient than two 3-packs (2*2=4), encouraging its use. */

if(boxQuantity == 5) return 3; /* Less preferred for certain combinations. */

if(boxQuantity == 2) return 6; /* Less efficient. */

if(boxQuantity == 1) return 10; /* Least efficient. */

if(boxQuantity == 4) return 7; /* More efficient than two 2-packs. */

return Integer.MAX_VALUE; /* Should not happen for valid pack sizes. */

}

/**

,* Distributes a total number of boxes into an optimal combination of pack sizes,

,* prioritising based on a defined scoring system.

,*

,* @param totalBoxes The total number of boxes to distribute.

,* @param availablePackSizes A list of all available PackSize entities.

,* @return A Map where keys are PackSize entities and values are the quantity of that pack size.

,*/

public Map<PackSize, Integer> distributePacks(int totalBoxes, List<PackSize> availablePackSizes) {

/* Sort pack sizes by box quantity in ascending order for DP. */

availablePackSizes.sort((ps1, ps2) -> Integer.compare(ps1.getBoxQuantity(), ps2.getBoxQuantity()));

/* dp[i] stores a SimpleEntry: key = min_total_cost, value = min_num_packs. */

SimpleEntry<Integer, Integer>[] dp = new SimpleEntry[totalBoxes + 1];

/* parent[i] stores the PackSize used to reach 'i' boxes from a previous state. */

PackSize[] parent = new PackSize[totalBoxes + 1];

/* Initialise dp array. */

for(int i = 1; i <= totalBoxes; i++) {

dp[i] = new SimpleEntry<>(Integer.MAX_VALUE, Integer.MAX_VALUE); /* Max cost, Max packs. */

}

dp[0] = new SimpleEntry<>(0, 0); /* 0 cost, 0 packs for 0 boxes. */

for(int i = 1; i <= totalBoxes; i++) {

for(PackSize packSize : availablePackSizes) {

int currentPackBoxQty = packSize.getBoxQuantity();

int currentPackCost = getPackCost(currentPackBoxQty);

if(currentPackBoxQty <= i) {

SimpleEntry<Integer, Integer> prevDp = dp[i - currentPackBoxQty];

if(prevDp.getKey() != Integer.MAX_VALUE) { /* Check if previous state is reachable. */

int newTotalCost = prevDp.getKey() + currentPackCost;

int newNumPacks = prevDp.getValue() + 1;

/* Prioritise lower total cost, then fewer packs. */

if(newTotalCost < dp[i].getKey() || (newTotalCost == dp[i].getKey() && newNumPacks < dp[i].getValue())) {

dp[i] = new SimpleEntry<>(newTotalCost, newNumPacks);

parent[i] = packSize;

}

}

}

}

}

Map<PackSize, Integer> result = new HashMap<>();

int currentBoxes = totalBoxes;

/* Check if a solution was found. */

if(dp[totalBoxes].getKey() == Integer.MAX_VALUE) {

LOGGER.warning("No exact distribution found for " + totalBoxes + " boxes with available pack sizes.");

return result;

}

while(currentBoxes > 0 && parent[currentBoxes] != null) {

PackSize usedPack = parent[currentBoxes];

result.put(usedPack, result.getOrDefault(usedPack, 0) + 1);

currentBoxes -= usedPack.getBoxQuantity();

}

if(currentBoxes != 0) {

LOGGER.warning("Could not perfectly reconstruct distribution for " + totalBoxes + " boxes. Remaining: " + currentBoxes);

if (currentBoxes > 0 && !availablePackSizes.isEmpty()) {

PackSize smallestPack = availablePackSizes.stream()

.min((ps1, ps2) -> Integer.compare(ps1.getBoxQuantity(),

ps2.getBoxQuantity()))

.orElse(null);

if(smallestPack != null) {

result.put(smallestPack, result.getOrDefault(smallestPack, 0) +

(currentBoxes + smallestPack.getBoxQuantity() - 1) /

smallestPack.getBoxQuantity());

}

}

}

return result;

}

}

This dynamic programming approach ensures that for any given total number of boxes, I find the most "cost-effective" way to combine available pack sizes. It's a small piece of algorithmic magic that saves a lot of manual calculation and ensures optimal resource utilisation.

User Experience

Beyond the backend magic, I've also focused on refining the user interface. Simple but impactful changes, like ensuring all dialogs can be closed with the `ESC` key and that primary action buttons respond to the `Enter` key, significantly improve the fluidity of the user experience. These might seem like minor details, but they add up to a much more intuitive and efficient interaction for staff.

What's Next?

This application is an ongoing project. My immediate focus is on integrating the dataset for our final partner program and implementing its complex business logic which involves nuanced account management (partner numbers, dealer codes, and direct users). This will further reduce manual intervention and streamline our operations.

Looking further ahead, my plans include:

- Automated Courier Integration: Direct API integration with our courier

providers to automate shipping label generation and tracking updates.

- Advanced Query Management: Improve the query management system with more

sophisticated workflows and reporting.

- Automated Billing System: Integrating with our accounting systems to

automate the billing process for chargeable services.

- Enhanced Analytics: Leveraging the structured data in MySQL to

provide deeper insights into our operations, enabling data-driven decision-making.

For me, this is more than just a software project; it's a commitment to efficiency, accuracy, and a future where our team can focus on what they do best: providing excellent recycling services, rather than wrestling with spreadsheets and fixing typing errors from user input.

Conclusion

The effort in creating a system that moves from a manual, error-prone workflow to an automated, "intelligent" system has come with some challenges, but it's been incredibly rewarding so far. I view it as a testament to how thoughtful software engineering has the ability transform business operations, one line of code (and one less spreadsheet) at a time.

- Happy Hacking!